SecOps Process Framework for Automation and AI

Did you know that refrigerators and cyber incident response plans can share an ISO standard?

“Automate the boring stuff.” “Replace your Tier 1 SOC with AI.” When SecOps teams think about process management, it’s often through the lens of automating toil within processes: eliminating the need for humans to perform low-value, time-intensive tasks, thus freeing up those humans to perform more creative work that can’t be automated.

That said, whether you’re using manual runbooks, SOAR, hyperautomation, agentic AI, or a new automation buzzword that hasn’t been invented yet, high-performing security teams know that not every process can or should be treated equally. Sometimes the effort to automate a task outweighs the value provided by removing it from a human’s plate.

Picking the right kind of automation matters as well. Automation can be deterministic (where the same inputs will always lead to the same outputs, like a Python script) or probabilistic (where the same inputs can have some variation in output, like a large language model).[1]
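
To make that distinction concrete, here's a minimal sketch. The lookup table, the labels, and the weights are all made up for illustration, and the second function is only a stand-in that samples from a fixed distribution; it is not how any real language model works internally.

```python
import random

# Made-up sample data (documentation-range IP addresses).
GEO_TABLE = {"203.0.113.7": "NL", "198.51.100.4": "US"}

# Hypothetical triage labels and weights, chosen only for illustration.
LABELS = ["answer directly", "escalate", "ask for more detail"]
WEIGHTS = [0.7, 0.2, 0.1]


def lookup_country(ip: str) -> str:
    """Deterministic: a plain table lookup, so the same input always gives the same output."""
    return GEO_TABLE.get(ip, "unknown")


def sampled_label(ticket_text: str, rng: random.Random) -> str:
    """Probabilistic stand-in: the output is drawn from a distribution, so it can vary per call."""
    return rng.choices(LABELS, weights=WEIGHTS, k=1)[0]


rng = random.Random()
# One distinct answer, no matter how many times we ask.
print({lookup_country("203.0.113.7") for _ in range(100)})
# Usually more than one distinct answer for the identical input.
print({sampled_label("Is this email phishing?", rng) for _ in range(100)})
```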

My mental framework for figuring out the right approach to managing SecOps and SOC processes involves weighing the complexity, frequency, criticality, and tolerance of that particular workflow.

Manufacturers have this one simple trick

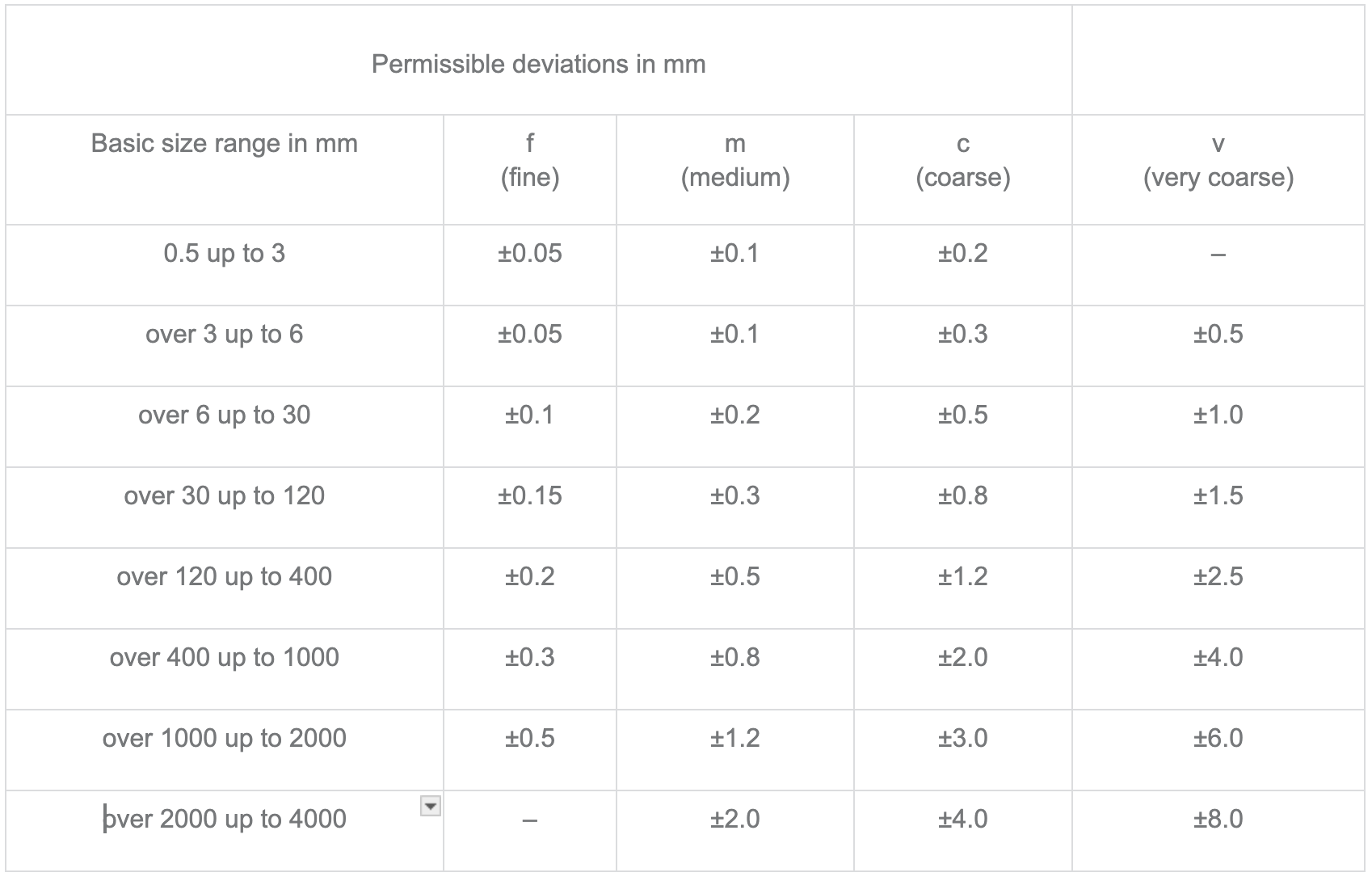

Believe it or not, cybersecurity isn’t the only field that has processes. Manufacturers have had to solve the problem of “ensuring that all these parts will fit together at the end” for over a hundred years now. Where they’ve landed is the ISO 2768 standard for machining tolerances: in other words, how much variation is acceptable when drilling holes, cutting metal, and so forth.

To gloss over a LOT of detail, when doing bulk manufacturing it’s virtually impossible to make every piece of output exactly the same. If you need to drill two holes in a piece of sheet metal that are designed to be 120 mm apart, sometimes they’ll be slightly less than that, and sometimes slightly more. So how much less or more is acceptable?

In plain English, if I said “I need two holes drilled into this sheet metal 120 mm apart with medium tolerance,” the manufacturer could produce a piece of sheet metal with two holes anywhere between 119.7 and 120.3 mm apart (that is, 120 mm ± 0.3 mm). Anything outside that range would be considered unacceptable.[2]

In other words:

- The more precision you want, the more expensive the manufacturing process

- The larger the thing you’re machining, the more deviation is tolerated
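
The sheet metal example boils down to a very small check. Here's a sketch using only the numbers from the example above (120 mm nominal, 0.3 mm of permitted deviation either way); the function name and the sample measurements are mine, and this is not a lookup of the actual ISO 2768 tables.

```python
def within_tolerance(nominal_mm: float, measured_mm: float, deviation_mm: float) -> bool:
    """Return True if the measurement is no more than deviation_mm away from nominal."""
    return abs(measured_mm - nominal_mm) <= deviation_mm


# Values taken from the example: holes designed to be 120 mm apart, +/- 0.3 mm.
NOMINAL_MM = 120.0
DEVIATION_MM = 0.3

# Made-up measurements from a hypothetical production run.
for measured in (119.8, 120.0, 120.25, 120.6):
    verdict = "accept" if within_tolerance(NOMINAL_MM, measured, DEVIATION_MM) else "reject"
    print(f"{measured} mm -> {verdict}")
```

Tightening `DEVIATION_MM` rejects more parts from the same run, which is one way to see why more precision costs more.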

There are a lot of parallels here for cybersecurity! It takes a lot of time and effort to make a SOC process run exactly the same way every single time, whether via a runbook that needs to be kept up to date, or an automation that requires hundreds of exact steps. And bigger processes (like responding to an incident) will inherently have more variations than smaller ones, due to their complexity.

Adding in the other factors

Okay, that’s enough manufacturing discussion for the time being. What about the other attributes of a SOC process?

Complexity

There’s no hard and fast boundary that turns a simple process into a complex one. In general, though:

- A simple process will have fewer steps than a complex one

- A simple process has no (or very few) logical decision trees

- A simple process will generally progress from beginning to end in a straightforward way

- A simple process has no sub-processes, while a complex process is often made up of multiple sub-processes that can be simple or complex themselves

“Use an API to look up IP address geolocation” is a simple process. “Respond to an incident” is a highly complex process, and one that is often broken down into sub-processes. The more complex a process becomes, the more deviations we should naturally expect.
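
If it helps to see that nesting as a data structure, here's a rough sketch. The `Process` class, its field names, and every number in the example are assumptions for illustration; the point is only that a complex process is a tree whose step and decision counts add up across its sub-processes.

```python
from dataclasses import dataclass, field


@dataclass
class Process:
    name: str
    steps: int = 1             # steps performed directly by this process
    decision_points: int = 0   # branches in this process's own logic
    sub_processes: list["Process"] = field(default_factory=list)

    def total_steps(self) -> int:
        """Steps in this process plus all nested sub-processes."""
        return self.steps + sum(p.total_steps() for p in self.sub_processes)

    def total_decisions(self) -> int:
        """Decision points in this process plus all nested sub-processes."""
        return self.decision_points + sum(p.total_decisions() for p in self.sub_processes)

    def depth(self) -> int:
        """1 for a process with no sub-processes, deeper as nesting grows."""
        return 1 + max((p.depth() for p in self.sub_processes), default=0)


# Made-up numbers, purely to show the shape.
geo_lookup = Process("Look up IP geolocation", steps=2)
incident = Process(
    "Respond to an incident",
    steps=3,
    decision_points=4,
    sub_processes=[
        Process("Enrich the alert", steps=2, decision_points=1, sub_processes=[geo_lookup]),
        Process("Isolate a compromised system", steps=5, decision_points=2),
    ],
)

for p in (geo_lookup, incident):
    print(p.name, p.total_steps(), p.total_decisions(), p.depth())
```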

Frequency

I don’t think you need me to define the concept of frequency for you. The only thing I’ll note is that it’s important to keep track of the outside factors that influence the frequency with which you need to execute a given process. For example, “someone has a question for the security team” scales pretty linearly and predictably with the employee population.

Criticality

Much like complexity, the criticality of a process is subjective. But in general, criticality is driven by one of two things:

- The importance of the process outcome. For example, if you need to lock a compromised account during an incident response process, it’s pretty darn important that the correct account gets locked, and that the locking mechanism works reliably.

- The importance of the underlying system. Misconfiguring a firewall rule for a production system can cost millions of dollars in lost revenue if it accidentally blocks legitimate access.[3]

Either way, when a process is mission-critical, our tolerance for deviation from the designed outcome is dramatically lower.

Putting it all together

We now have a taxonomy for describing SOC processes, so how should we think about applying our resources to them?

People vs AI vs Code

Humans are incredibly adaptable problem solvers. We can think and operate across system boundaries and reason about how to properly handle unfamiliar situations. Unfortunately, humans get tired. We make mistakes. We get cranky and hungry. Sometimes we burn out.

AI doesn’t get tired, cranky, or hungry. But it can definitely make mistakes, and needs to be explicitly fed a lot more information than a human being does to determine a contextually correct answer to a problem.

As Anthropic says about their AI models:

“When interacting with Claude, think of it as a brilliant but very new employee (with amnesia) who needs explicit instructions. Like any new employee, Claude does not have context on your norms, styles, guidelines, or preferred ways of working. The more precisely you explain what you want, the better Claude’s response will be. [...] Even the most advanced language models, like Claude, can sometimes generate text that is factually incorrect or inconsistent with the given context.”

Unlike an AI model, code (and I’m using “code” loosely here to mean everything from hand-written Python scripts to automation platforms that support a no-code UI like my employer, Tines) will do exactly what you tell it to do, every single time. You can write tests for code, change it in predictable and controllable ways, and so on. The tradeoff is that it takes more time (and sometimes more technical expertise) to achieve the precise outcomes you’re looking for.
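
Taken together, the four attributes and the three kinds of "worker" suggest a rough decision procedure. Here's a minimal sketch of one. The `Level` scale, the rule order, the thresholds, and the fallback answer are all my assumptions for illustration, picked so that the output agrees with the three practical scenarios in this post; treat it as a thinking aid rather than a scoring system.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    EXTREME = 4


@dataclass(frozen=True)
class ProcessProfile:
    complexity: Level
    frequency: Level
    criticality: Level
    tolerance: Level  # how much variation in the outcome is acceptable


def recommend(p: ProcessProfile) -> str:
    """Hypothetical rules, checked in order; the first match wins."""
    # Too big to reason about as a single unit: split it up first.
    if p.complexity >= Level.EXTREME:
        return "Break into sub-processes"
    # High stakes and little room for variation: use something deterministic.
    if p.criticality >= Level.HIGH and p.tolerance <= Level.LOW:
        return "Execute via code"
    # Frequent toil where some variation is fine: a probabilistic worker fits.
    if p.frequency >= Level.HIGH and p.tolerance >= Level.HIGH:
        return "Delegate to AI"
    # Assumed fallback: automation may cost more than it saves.
    return "Keep with a human"


service_desk = ProcessProfile(Level.LOW, Level.HIGH, Level.LOW, Level.HIGH)
print(recommend(service_desk))
```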

Practical Scenarios

Scenario: Running an employee-facing security service desk

| Complexity | Frequency | Criticality | Tolerance | Recommendation |
|---|---|---|---|---|
| Low | High | Low | High | Delegate to AI |

Many SOC and SecOps teams are tasked with being the front-line, employee-facing part of the security organization. The task of answering basic cybersecurity questions, or escalating requests to other parts of the security org, is not particularly complex, happens frequently, and has a comparatively high tolerance for process variance. After all, if someone receives a mis-escalated ticket, they’ll just send it right back. From a human perspective, this process is generally considered toil. That makes it a great candidate to hand to an AI model for automated triage.

Scenario: Performing incident response

| Complexity | Frequency | Criticality | Tolerance | Recommendation |
|---|---|---|---|---|
| Extremely High | Low | High | Medium | Break into sub-processes |

Responding to a confirmed incident is inherently a complex process, so much so that it’s worth breaking down into sub-processes. No two incidents are ever exactly the same, which means human creativity is absolutely required. It also means that attempting to overly reduce variance, whether through runbooks or automation, is a well-nigh impossible task that can lead to its own bad outcomes (see Matt Linton’s post on professionalizing vs. proceduralizing incident response teams).

Scenario: Isolating a compromised system as an incident response step

| Complexity | Frequency | Criticality | Tolerance | Recommendation |
|---|---|---|---|---|
| Medium | Low | High | Low | Execute via code |

High-criticality processes that have low tolerance for process variation should be executed via deterministic workflows. A human might make the decision that a system needs to be isolated (and that decision might even stem from an AI assistant’s suggestion!), but the stakes are too high to risk a non-deterministic process locking the wrong system, or missing a step and leaving the compromised system online.
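
Here's roughly what that split between a human decision and deterministic execution could look like. The `EdrClient` interface, its method names, and the `FakeEdr` stand-in are all hypothetical; a real EDR or automation platform will expose something different. The shape is what matters: refuse to act without a named approver for this exact host, perform the action, then verify it instead of assuming it worked.

```python
from typing import Protocol


class EdrClient(Protocol):
    """Hypothetical interface, not any real vendor's API."""

    def isolate(self, host_id: str) -> None: ...
    def is_isolated(self, host_id: str) -> bool: ...


class IsolationError(Exception):
    pass


def isolate_host(edr: EdrClient, host_id: str, approved_host_id: str, approver: str) -> str:
    # Step 1: a named human must have approved this exact host.
    if not approver:
        raise IsolationError("isolation requires a named human approver")
    if host_id != approved_host_id:
        raise IsolationError(f"approval was for {approved_host_id!r}, not {host_id!r}")
    # Step 2: perform the action.
    edr.isolate(host_id)
    # Step 3: confirm the system really is offline.
    if not edr.is_isolated(host_id):
        raise IsolationError(f"{host_id!r} is still online after the isolation request")
    return f"{host_id} isolated (approved by {approver})"


class FakeEdr:
    """In-memory stand-in so the sketch runs without touching a real system."""

    def __init__(self) -> None:
        self.isolated: set[str] = set()

    def isolate(self, host_id: str) -> None:
        self.isolated.add(host_id)

    def is_isolated(self, host_id: str) -> bool:
        return host_id in self.isolated


edr = FakeEdr()
print(isolate_host(edr, "laptop-0042", approved_host_id="laptop-0042", approver="analyst@example.com"))
```

Because every branch is explicit, each failure mode (no approver, wrong host, isolation that didn't take) can get its own test, which is the property the deterministic route is buying you.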

In Conclusion

Process management is about more than just eliminating toil, or trying to replace humans with AI everywhere. As Jake Williams once said,

“While I can’t run a SOC with no tooling, I’ll take one with less tooling and more process over one with more tooling and less process every day of the week.”

With today’s SecOps teams having more responsibilities and less time than ever before, it’s vital to adopt a strategy to optimize your team’s implementation of AI and automation.

[1] Before you take out your pitchforks, I’m very much aware that (a) you can create a Python script with randomized outputs, and (b) I just called LLMs a form of automation. Would you honestly have been happier if I called Python scripts a form of AI?

[2] In all reality, I’m sure every manufacturer would first start by laughing at me for butchering that description, and then they would produce the sheet metal I requested.

[3] Please don’t ask me how I know this from personal experience.